What Are Large Language Models?

Large language models (LLMs) are a category of artificial intelligence systems that learn statistical patterns from vast amounts of text in order to interpret and generate human-like language. Unlike traditional rule-based language programs, LLMs use deep neural networks to predict and produce coherent text based on context.

Key Characteristics of Large Language Models

- Scale: LLMs are characterized by having billions or even trillions of parameters, making them significantly larger than earlier models.

- Contextual Understanding: They analyze the context within sentences and paragraphs to generate relevant and meaningful responses.

- Pre-training and Fine-tuning: These models undergo pre-training on vast datasets and can be fine-tuned for specific tasks or industries.

- Generative Capabilities: LLMs can produce original text, translate languages, summarize information, and answer questions fluently, though accuracy varies by task and their output can contain factual errors.
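
To make the scale point concrete, here is a rough back-of-the-envelope sketch of where the parameters come from. It relies on a common approximation of about 12 × d_model² weights per transformer layer and ignores embeddings, biases, and layer norms. The function name is illustrative, and the example configuration (96 layers, model width 12288) is the one published for the largest GPT-3 model, which is usually quoted at about 175 billion parameters.

```python
def approx_transformer_params(n_layers: int, d_model: int) -> int:
    """Rough non-embedding parameter count for a standard transformer stack.

    Assumption: each layer holds about 4 * d_model^2 attention weights
    (query, key, value, and output projections) plus about 8 * d_model^2
    feed-forward weights (hidden layer 4x wider than d_model).
    Biases, layer norms, and embeddings are ignored.
    """
    return 12 * n_layers * d_model ** 2


# Published configuration of the largest GPT-3 model: 96 layers, width 12288.
gpt3_estimate = approx_transformer_params(n_layers=96, d_model=12288)
print(f"{gpt3_estimate / 1e9:.0f}B parameters")  # prints "174B parameters"
```

The estimate lands close to the quoted figure, which shows that model width and depth account for nearly all of the parameter count.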

The Architecture Behind Large Language Models

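The backbone of most modern large language models is the transformer architecture, introduced in 2017 by Vaswani et al. in the paper "Attention Is All You Need." This design reshaped natural language processing by letting models process all tokens of a sequence in parallel and relate distant positions directly through attention, which makes long-range dependencies in text easier to handle.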

Understanding the Transformer Model

- Self-Attention Mechanism: Allows the model to weigh how relevant every other token in the input is to each token, capturing nuanced relationships between words.

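- Encoder-Decoder Structure: In the original transformer and in some LLMs, an encoder processes the input text while a decoder generates the output, which suits tasks like translation. Many LLMs use only one half: an encoder alone or a decoder alone.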

- Layered Neural Networks: Multiple layers of attention and feed-forward networks enable deep contextual learning.
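
The self-attention step listed above can be sketched in a few lines of plain Python. This is a minimal illustration of scaled dot-product attention, not a production implementation. It assumes toy two-dimensional token vectors and uses them directly as queries, keys, and values, whereas a real transformer first applies learned projection matrices and runs several attention heads in parallel. The function names are illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this token's query to every token's key.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input (assumption): three tokens with made-up 2-dimensional vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how much one token attends to every token in the sequence. The first token gives its lowest weight to the second token because their vectors share no direction.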

Examples of transformer-based LLMs include GPT (Generative Pre-trained Transformer), a decoder-only model family, BERT (Bidirectional Encoder Representations from Transformers), an encoder-only model, and their numerous variants.

How Large Language Models Are Trained

Training large language models involves exposing them to massive datasets comprising diverse text from books, articles, websites, and other sources. This process can be broken down into several stages:

1. Data Collection and Preprocessing

- Gathering extensive and varied textual data to ensure broad language coverage.

- Cleaning and organizing data to remove noise, duplicates, and irrelevant content.
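
As a rough illustration of the cleaning step, the sketch below strips HTML-like tags, collapses whitespace, drops very short fragments, and removes exact duplicates. The function name, the `min_words` threshold, and the sample documents are assumptions made for this example. Real pipelines typically add language detection, quality filtering, and near-duplicate detection.

```python
import re


def clean_corpus(documents, min_words=3):
    """Return cleaned, de-duplicated documents in their original order."""
    seen = set()
    cleaned = []
    for doc in documents:
        text = re.sub(r"<[^>]+>", " ", doc)        # strip HTML-like tags
        text = re.sub(r"\s+", " ", text).strip()   # collapse whitespace
        if len(text.split()) < min_words:          # drop short fragments
            continue
        key = text.lower()                         # case-insensitive duplicate check
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


# Hypothetical raw documents, for illustration only.
raw = [
    "<p>Large language models learn from text.</p>",
    "Large   language models learn from text.",
    "Click here",
    "Transformers process tokens in parallel.",
]
print(clean_corpus(raw))
```

Of the four sample documents, only two survive: the second is a duplicate of the first once tags and spacing are normalized, and "Click here" falls below the word threshold.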

2. Pre-training

- Models learn to predict the next word (as in GPT-style models) or to fill in masked words in sentences (as in BERT-style models), developing a general understanding of language patterns.

- This phase requires substantial computational resources, often leveraging powerful GPUs or TPUs over weeks or months.
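
The next-word objective can be made concrete with a small sketch. It shows how one sentence yields several (context, target) training examples and how cross-entropy loss scores a prediction. It assumes whitespace-separated words as tokens and a made-up probability table standing in for a model's output. Real systems use subword tokenizers and neural networks that produce these probabilities.

```python
import math


def next_token_pairs(tokens):
    """Build (context, target) pairs: each prefix predicts the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cross_entropy(predicted_probs, target):
    """Loss for one prediction: low when the target token got high probability."""
    return -math.log(predicted_probs[target])


tokens = "the cat sat on the mat".split()
pairs = next_token_pairs(tokens)
for context, target in pairs:
    print(context, "->", target)

# Hypothetical model output for the context ["the", "cat"] (made-up numbers).
probs = {"sat": 0.7, "ran": 0.2, "mat": 0.1}
loss_good = cross_entropy(probs, "sat")  # the true next word was likely
loss_bad = cross_entropy(probs, "mat")   # the true next word was unlikely
print(loss_good < loss_bad)
```

Pre-training repeatedly adjusts the model's parameters to reduce this loss, averaged over an enormous number of such pairs.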

3. Fine-tuning

- Adapting the pre-trained model to specific tasks such as sentiment analysis, question answering, or translation.

- Fine-tuning improves performance in targeted applications by training on smaller, domain-specific datasets.

Applications of Large Language Models

Large language models have a wide range of applications across industries, demonstrating their versatility and impact.

Common Use Cases

- Natural Language Understanding: Enhancing chatbots and virtual assistants to comprehend user queries better.

- Content Creation: Automating writing tasks, including articles, reports, and creative storytelling.

- Translation Services: Providing accurate and fluent translations across multiple languages.

- Sentiment Analysis: Evaluating customer feedback and social media posts to gauge public opinion.

- Educational Tools: Facilitating language learning and tutoring through personalized interactions.

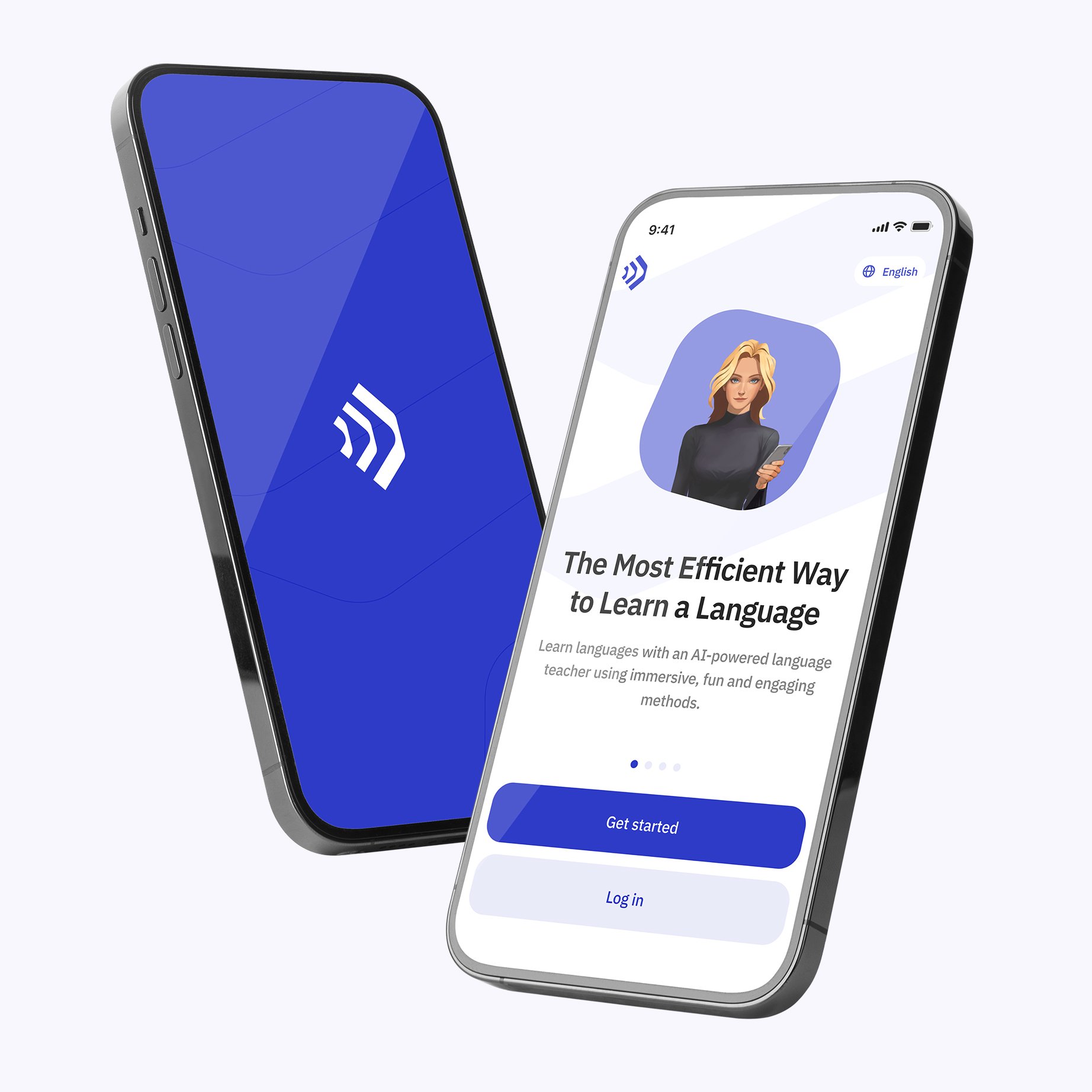

Platforms like Talkpal leverage large language models to create immersive and interactive language learning experiences, enabling learners to practice conversation and comprehension in a realistic setting.

Challenges and Ethical Considerations

Despite their impressive capabilities, large language models present several challenges and ethical concerns that must be addressed.

Technical Challenges

- Computational Costs: Training and deploying LLMs require significant energy and hardware resources.

- Bias and Fairness: Models may inherit biases present in training data, leading to unfair or harmful outputs.

- Interpretability: Understanding how LLMs arrive at specific outputs remains complex.

Ethical Concerns

- Misuse Potential: LLMs can be used to generate misleading information, convincing fake content, or spam at scale.

- Privacy Issues: Training data may inadvertently include sensitive information.

- Impact on Employment: Automation of language-based tasks may affect jobs in content creation and customer service.

The Future of Large Language Models

The trajectory of large language models points toward even greater sophistication and integration into daily life. Researchers are focusing on:

- Efficiency Improvements: Developing smaller, more efficient models that require less computational power.

- Multimodal Models: Combining text with images, audio, and video for richer understanding.

- Enhanced Personalization: Tailoring language models to individual user needs and preferences.

- Robustness and Safety: Creating models that are more reliable and less prone to errors or harmful outputs.

By engaging with platforms like Talkpal, learners can stay at the forefront of these advancements, gaining practical knowledge and skills in AI-driven language technologies.

Why Talkpal Is Ideal for Learning About Large Language Models

Talkpal offers an interactive and user-friendly environment for exploring the concepts and applications of large language models. Its features include:

- Real-Time Conversations: Practice with AI-powered chatbots that simulate natural dialogue.

- Comprehensive Learning Resources: Access tutorials, examples, and explanations tailored to different proficiency levels.

- Personalized Feedback: Receive guidance to improve your understanding and usage of language models.

- Community Support: Engage with fellow learners and experts to share insights and experiences.

By integrating Talkpal into your learning journey, you can gain a solid foundation in the principles and practicalities of large language models, preparing you for the evolving landscape of AI-powered communication.

Conclusion

Large language models represent a transformative leap in artificial intelligence, enabling machines to process and generate human language with unprecedented fluency and versatility. Understanding their architecture, training, and applications is essential for anyone interested in the future of technology and communication. Utilizing platforms like Talkpal provides an effective and engaging way to learn about these models, helping you unlock the potential of AI-driven language tools. As the field continues to evolve, mastering the basics of large language models will empower you to participate in shaping the next generation of intelligent systems.